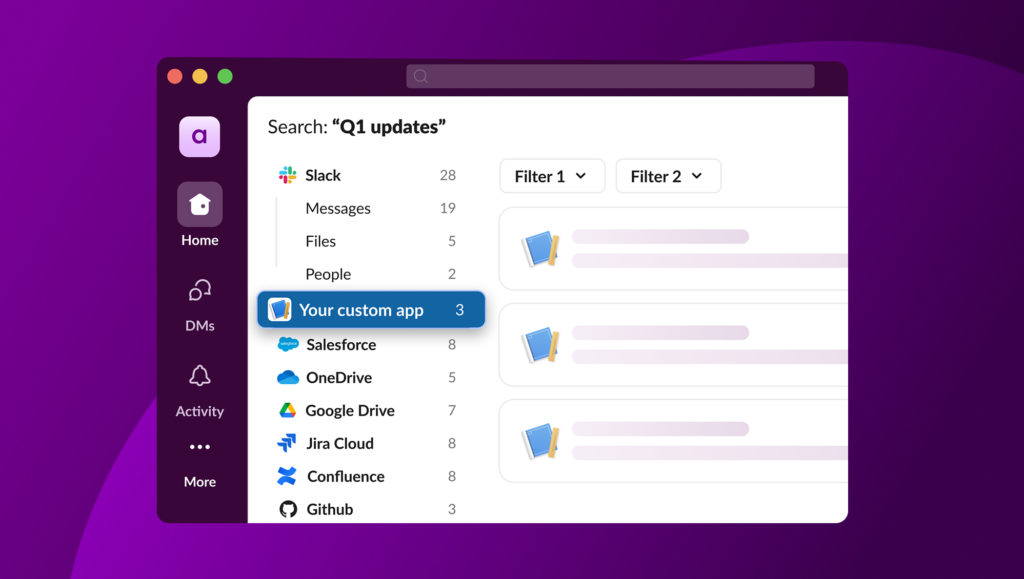

Large Language Models (LLMs) are mostly associated with chat interfaces, but what if you could put an LLM to work right in the flow of your workday? Imagine using AI to answer a coding question, generate a quick report, or draft a reply, all without ever leaving Slack. The real power of AI in Slack isn’t a new chat window; it’s the ability to bring intelligent capabilities to the place where work is already happening. Here are four entry points (events, shortcuts, slash commands, and modals) that can turn your app into a seamless partner, ready to assist whenever and wherever it’s needed.

1. Events

Slack has dozens of events you can listen for and respond to, but one of the simplest ways to summon an LLM with a single click is a reacji (emoji reaction) event listener. Say you’re talking with teammates in Slack and a coding question comes up that nobody can answer. React to the question with an emoji (in this case, :robot_face:), have your app listen for the resulting reaction_added event, then pass the question along to an LLM. The app can then answer the question in the message’s thread. You can see this example’s code snippet in the documentation for the Events API.
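The flow above can be sketched in Python as a small function that is independent of any SDK. This is a minimal sketch, not the snippet from the Events API documentation: `fetch_text`, `ask_llm`, and `post_reply` are hypothetical callables that your app would supply (for example, thin wrappers around the `conversations.history` and `chat.postMessage` Web API methods and your LLM client). The event fields it reads (`reaction`, `item.type`, `item.channel`, `item.ts`) follow the shape of the `reaction_added` event.

```python
from typing import Callable, Optional

# Reaction names arrive without the surrounding colons.
TRIGGER_REACTION = "robot_face"


def handle_reaction_added(
    event: dict,
    fetch_text: Callable[[str, str], Optional[str]],  # hypothetical: (channel, ts) -> message text
    ask_llm: Callable[[str], str],                    # hypothetical: question -> answer
    post_reply: Callable[[str, str, str], None],      # hypothetical: (channel, thread_ts, text)
) -> bool:
    """Answer the reacted-to message in its thread.

    Returns True if a reply was posted, False if the event was ignored.
    """
    item = event.get("item", {})
    # Ignore other emoji, and reactions on files rather than messages.
    if event.get("reaction") != TRIGGER_REACTION or item.get("type") != "message":
        return False

    channel, ts = item["channel"], item["ts"]
    # The event carries only a pointer to the message, so the text is fetched separately.
    question = fetch_text(channel, ts)
    if not question:
        return False

    # Simple case: use the message's own ts as thread_ts so the answer lands in its thread.
    post_reply(channel, ts, ask_llm(question))
    return True
```

In a Bolt for Python app, a function like this would typically be called from a listener registered with `@app.event("reaction_added")`. Passing the Slack and LLM calls in as arguments keeps the decision logic easy to test without a workspace.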

2. Message shortcuts

If you want a similar one-click experience that is a deliberate action rather than a reaction, message shortcuts are the perfect choice. A message shortcut is an option in the menu that appears when you click the three dots on a message in Slack. As in the previous example, selecting it sends the message’s question to an LLM through your app. See an example of this on the Implementing shortcuts page.
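As a rough illustration of the shortcut flow, the sketch below handles a message shortcut payload in plain Python. It is not the example from the Implementing shortcuts page: the callback ID `ask_llm_about_message` and the `ask_llm` and `post_reply` callables are assumptions made for this example. Unlike a reaction event, a message shortcut payload (type `message_action`) already includes the message text, so no extra fetch is needed.

```python
from typing import Callable

# Hypothetical callback ID; it must match the one configured for the shortcut.
CALLBACK_ID = "ask_llm_about_message"


def handle_message_shortcut(
    payload: dict,
    ask_llm: Callable[[str], str],                # hypothetical: question -> answer
    post_reply: Callable[[str, str, str], None],  # hypothetical: (channel, thread_ts, text)
) -> bool:
    """Send the selected message to the LLM and reply in its thread.

    Returns True if a reply was posted, False if the payload was ignored.
    """
    if payload.get("type") != "message_action" or payload.get("callback_id") != CALLBACK_ID:
        return False

    message = payload["message"]
    question = (message.get("text") or "").strip()
    if not question:
        return False

    # If the message is already a thread reply, answer in that thread;
    # otherwise start a thread on the message itself.
    thread_ts = message.get("thread_ts", message["ts"])
    post_reply(payload["channel"]["id"], thread_ts, ask_llm(question))
    return True
```

With Bolt for Python, this would usually sit behind a listener registered with `@app.shortcut("ask_llm_about_message")`, after the request has been acknowledged.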

3. Slash commands

For a more universal entry point, slash commands invoke an app from anywhere in Slack: just type a slash character followed by the name of the command. In the documentation’s code sample, the command is named /ask-code-assistant, and it gives users another way to ask the coding assistant app a question. Find this example in the documentation for Implementing slash commands.
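To make the slash command flow concrete, here is a minimal sketch of the logic behind a command like /ask-code-assistant. It is an assumption-laden illustration rather than the documented sample: `ask_llm` is a hypothetical callable, and the choice to show the answer with `in_channel` and the usage hint as `ephemeral` is just one possible design. The function reads the `text` field of the command payload (everything the user typed after the command name) and returns a message body of the kind an app can send back to the command's `response_url`.

```python
from typing import Callable

USAGE_HINT = "Usage: `/ask-code-assistant <your question>`"


def build_command_response(command: dict, ask_llm: Callable[[str], str]) -> dict:
    """Turn a slash command payload into a response message body."""
    question = (command.get("text") or "").strip()

    if not question:
        # Nothing was typed after the command: show a hint only to the invoking user.
        return {"response_type": "ephemeral", "text": USAGE_HINT}

    # Assumption for this sketch: the answer is shared with the whole channel.
    return {"response_type": "in_channel", "text": ask_llm(question)}
```

Slack expects a slash command request to be acknowledged within three seconds, so a real app would likely acknowledge first and run the LLM call afterward, then deliver this body through the `response_url`. In Bolt for Python, the listener would typically be registered with `@app.command("/ask-code-assistant")`.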

4. Modals

Modals are a good fit for structured, repeatable tasks where you’d like an LLM to work from predictable inputs. For example, say you have an app that uses an LLM to generate a product description based on a product’s name, key features, and target audience. You can use a modal to collect those pieces of information, then send them to the LLM without the back-and-forth that tends to come with an AI chat. Keep the verbose part, the detailed instructions for the LLM, in your app code, and send those instructions to the LLM along with the user’s input. This example has two parts: a slash command that opens the modal, and a view submission handler that processes the user’s input once it is received and posts a response. Find this example in our Modals documentation.
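The two parts described above can be sketched as a pair of plain Python functions: one that builds the modal view, and one that turns the submitted values into a prompt. This is a sketch under stated assumptions, not the example from the Modals documentation. The callback ID, block IDs, action IDs, and instruction text are all hypothetical names chosen for illustration; the view structure and the `view["state"]["values"][block_id][action_id]["value"]` lookup follow the Block Kit modal format.

```python
# Hypothetical identifiers used only in this sketch.
CALLBACK_ID = "product_description_modal"
FIELDS = {
    "name": "Product name",
    "features": "Key features",
    "audience": "Target audience",
}

# The detailed instructions live in app code, so users only fill in three fields.
INSTRUCTIONS = (
    "You write concise, friendly product descriptions. "
    "Use the details below and keep the result under 100 words."
)


def build_modal_view() -> dict:
    """Build a modal with one plain-text input per field."""
    blocks = []
    for key, label in FIELDS.items():
        blocks.append({
            "type": "input",
            "block_id": key + "_block",
            "label": {"type": "plain_text", "text": label},
            "element": {
                "type": "plain_text_input",
                "action_id": key + "_input",
                "multiline": key == "features",  # features usually need more room
            },
        })
    return {
        "type": "modal",
        "callback_id": CALLBACK_ID,
        "title": {"type": "plain_text", "text": "Product description"},
        "submit": {"type": "plain_text", "text": "Generate"},
        "close": {"type": "plain_text", "text": "Cancel"},
        "blocks": blocks,
    }


def build_prompt(view: dict) -> str:
    """Combine the fixed instructions with the values from a submitted view."""
    values = view["state"]["values"]
    lines = []
    for key, label in FIELDS.items():
        value = values[key + "_block"][key + "_input"]["value"]
        lines.append(f"{label}: {value}")
    return INSTRUCTIONS + "\n\n" + "\n".join(lines)
```

In a Bolt for Python app, the slash command listener would typically pass `build_modal_view()` to `client.views_open` along with the command's `trigger_id`, and a listener registered with `@app.view("product_description_modal")` would call `build_prompt` on the submitted view before sending the result to the LLM.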

By offering multiple entry points, you give your users a choice in how they interact with your app, whether it’s a quick reaction, a simple shortcut, a command, or a structured modal. This flexibility makes your app more intuitive and deeply woven into the daily rhythm of work.

Ready to start building? Check out our developer documentation to find the right entry point for your next project.